This paper focuses on the development of a computer vision system that estimates the movement of an MAV (X, Y, Z and yaw). The system integrates a set of cameras, physical and digital image filtering, and position estimation based on calibration of the system and an algorithm built on experimentally derived equations. The system is a low-cost alternative, both computationally and economically, capable of estimating the position of an MAV with millimeter-scale error, and it works with almost any camera available on the market. It was developed to offer an affordable way to research and develop new autonomous and intelligent systems for indoor environments.
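The abstract does not publish its experimentally derived equations, so the following is only a hypothetical sketch of how X, Y, Z and yaw can be recovered from one calibrated camera. It assumes a pinhole model with intrinsics `fx`, `fy`, `cx`, `cy`, a camera looking along the Z axis, and two markers mounted level on the MAV a known distance apart. The function name `estimate_mav_pose` and the two-marker layout are illustrative assumptions, not the authors' method.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def estimate_mav_pose(p1: Point, p2: Point, fx: float, fy: float,
                      cx: float, cy: float,
                      marker_sep_m: float) -> Tuple[float, float, float, float]:
    """Hypothetical sketch: (X, Y, Z, yaw) from two marker centroids in pixels.

    Assumes a calibrated pinhole camera looking along Z and two markers a
    known distance apart, mounted level on the MAV.
    """
    du, dv = p2[0] - p1[0], p2[1] - p1[1]
    pixel_sep = math.hypot(du, dv)
    if pixel_sep == 0:
        raise ValueError("markers coincide in the image")
    # Similar triangles: apparent separation shrinks linearly with depth.
    f = 0.5 * (fx + fy)
    z = f * marker_sep_m / pixel_sep
    # Back-project the midpoint between the markers to metric X, Y at depth Z.
    u_mid, v_mid = 0.5 * (p1[0] + p2[0]), 0.5 * (p1[1] + p2[1])
    x = (u_mid - cx) * z / fx
    y = (v_mid - cy) * z / fy
    # Yaw is the orientation of the marker baseline in the image plane.
    yaw = math.atan2(dv, du)
    return x, y, z, yaw
```

For example, markers detected at (300, 240) and (340, 240) px, with f = 800 px and a 0.1 m baseline, place the MAV at Z = 2 m, centered in the image, with zero yaw.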

Research Publications

Abstract

The paper presents a descriptive study that analyzes the relationships among the five dimensions of the attitude toward statistics scale designed and validated by Auzmendi (1992). The analysis is based on the item responses of a sample of 219 students from military officer training schools. In the exploratory analysis, the mean age of the respondents is 21.3 years; 88.6% are men and 11.4% are women. Reliability is highest for the Anxiety factor (0.896) and lowest for Fun (0.740). The factor analysis extracts five factors that together explain 66.362% of the total variance in the data, a proportion considered acceptable. Keywords: Attitude towards Statistics, Labor Field, Military Regime, Training.

1. Introduction

In the field of statistics, the concept of attitude has been used to describe a predisposition, carrying a certain emotional charge, that influences human behavior.
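The abstract reports a reliability value per factor but does not name the coefficient. For Likert-type attitude scales this is usually Cronbach's alpha, α = k/(k-1) · (1 - Σσ²ᵢ / σ²_T), where k is the number of items in the factor, σ²ᵢ is the variance of item i, and σ²_T is the variance of respondents' total scores. The sketch below assumes alpha was the coefficient used; the function name is illustrative.

```python
from statistics import variance
from typing import List, Sequence


def cronbach_alpha(responses: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for one factor (assumed to be the reported coefficient).

    responses[i][j] is respondent i's score on item j of the factor.
    Sample variances are used throughout; the ratio is unaffected as long as
    item and total variances use the same convention.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("need at least two items per factor")
    items: List[Sequence[float]] = list(zip(*responses))  # one column per item
    item_var_sum = sum(variance(col) for col in items)
    totals = [sum(row) for row in responses]
    return k / (k - 1) * (1 - item_var_sum / variance(totals))
```

Items that move in perfect lockstep give α = 1. Values such as the 0.740 to 0.896 range reported above indicate acceptable to good internal consistency.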

Abstract

The present work describes the development of a sample collection system for the outdoor robotic platform of the manufacturing laboratory. The main objective of the project is to implement a manipulator that automates a collection task using computer vision provided by the Kinect device. The Kinect supplies the necessary parameters, such as the depth required to locate objects, and the whole system uses free software. An anthropomorphic arm with six degrees of freedom was built and designed for future integration into the mobile platform project developed by Obando and Sánchez, resulting in an embedded system for object collection. Additionally, a graphical interface was implemented that lets the user view the recognized objects and control the collection operation in a controlled environment; the objects to be picked up must lie within a predetermined work area.
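To locate an object for the arm, a depth pixel from the Kinect must be converted into a 3D point and then into the arm's base frame. The sketch below does this with the standard pinhole back-projection. The intrinsics `fx = fy = 525`, `cx = 319.5`, `cy = 239.5` are values often quoted for the Kinect v1 and are placeholders only; the camera-to-base rotation and translation are hypothetical and must come from the real system's calibration.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Intrinsics:
    fx: float  # focal length in pixels (x)
    fy: float  # focal length in pixels (y)
    cx: float  # principal point (x)
    cy: float  # principal point (y)


# Placeholder values often quoted for the Kinect v1; replace with your own calibration.
KINECT_APPROX = Intrinsics(fx=525.0, fy=525.0, cx=319.5, cy=239.5)


def pixel_to_camera(u: float, v: float, depth_m: float, k: Intrinsics) -> Vec3:
    """Back-project pixel (u, v) with metric depth into the camera frame."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def camera_to_base(p: Vec3, rot: Sequence[Sequence[float]], trans: Vec3) -> Vec3:
    """Rigid transform p_base = R @ p_cam + t (hypothetical extrinsics)."""
    return tuple(sum(rot[i][j] * p[j] for j in range(3)) + trans[i]
                 for i in range(3))
```

The resulting base-frame point would then be handed to the arm's inverse kinematics, which is not sketched here.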

Abstract

This article studies and analyzes how effectively the armor of a military combat vehicle protects against possible attacks with electromagnetic weapons. The study relies on two software packages, ALTAIR FEKO and MATLAB. ALTAIR FEKO allows a 3D model of an armored vehicle to be created or imported, and the model's characteristics, such as relative permeability, electrical conductivity, and material thickness, to be modified. With this software, the 3D model can be simulated and its shielding effectiveness analyzed by observing the currents induced inside the vehicle. This shows whether these currents could destroy the vehicle's electronic systems. Using MATLAB tools such as App Designer to develop an application that calculates the shielding effectiveness of an armored vehicle is an additional, quick way to calculate armor losses, because such an application requires significantly less memory and fewer computational resources.
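The FEKO simulations cannot be reproduced here. The sketch below illustrates the kind of quick estimate a lightweight calculator can give, using the classical plane-wave approximation for a solid conductive sheet, SE ≈ A + R. Absorption loss is A = 8.686 · t/δ dB, with skin depth δ = 1/√(π f μ σ). Far-field reflection loss is R = 168 - 10 · log10(μr f / σr) dB, where σr is conductivity relative to copper. This textbook approximation is swapped in for illustration and is not the authors' application. It ignores the multiple-reflection term B (usually acceptable once A exceeds about 10 dB), apertures, and seams.

```python
import math

MU0 = 4e-7 * math.pi  # permeability of free space, H/m
SIGMA_CU = 5.8e7      # conductivity of copper, S/m (reference for sigma_r)


def skin_depth(f_hz: float, sigma: float, mu_r: float = 1.0) -> float:
    """Skin depth in metres: delta = 1 / sqrt(pi * f * mu * sigma)."""
    return 1.0 / math.sqrt(math.pi * f_hz * MU0 * mu_r * sigma)


def absorption_loss_db(t_m: float, f_hz: float, sigma: float, mu_r: float = 1.0) -> float:
    """A = 8.686 * t / delta (8.686 = 20*log10(e))."""
    return 8.686 * t_m / skin_depth(f_hz, sigma, mu_r)


def reflection_loss_db(f_hz: float, sigma: float, mu_r: float = 1.0) -> float:
    """Plane-wave (far-field) reflection loss; sigma_r is relative to copper."""
    sigma_r = sigma / SIGMA_CU
    return 168.0 - 10.0 * math.log10(mu_r * f_hz / sigma_r)


def shielding_effectiveness_db(t_m: float, f_hz: float, sigma: float,
                               mu_r: float = 1.0) -> float:
    """SE ~ A + R, neglecting the multiple-reflection correction B."""
    return (absorption_loss_db(t_m, f_hz, sigma, mu_r)
            + reflection_loss_db(f_hz, sigma, mu_r))
```

As a sanity check, copper at 1 MHz has a skin depth of about 66 µm and a plane-wave reflection loss of 108 dB.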

Abstract

UGVs have been used to replace humans in high-risk tasks such as exploring caves, minefields, or radiation-contaminated sites. They are also used to research path planners intended to replace the human driver of a car. UGVs can be controlled remotely from a safe location to avoid injuries and lethal harm and to reduce fatal accidents. However, to acquire information about the vehicle's surroundings, sensors or cameras are needed so that the remote operator can make the best decision about the vehicle's course. The information acquired by the sensors can also be used to build a map of the environment, which is useful for future applications or as a record of the explored area. This work develops and builds a vehicle with Ackermann steering that an operator can control remotely from a portable computer. Its purpose is to explore unknown environments using stereo vision cameras and to build a map of the surroundings.
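Ackermann steering turns the inner and outer front wheels by different angles so that both wheels roll around a common turning center. The sketch below computes those angles from the wheelbase L, the track width w, and the turn radius R measured to the rear-axle midpoint: δ_in = atan(L/(R - w/2)) and δ_out = atan(L/(R + w/2)). It also includes a kinematic bicycle-model step, a common approximation for dead reckoning on such vehicles. The function names and parameters are illustrative and are not taken from the described vehicle.

```python
import math
from typing import Tuple


def ackermann_angles(wheelbase_m: float, track_m: float,
                     turn_radius_m: float) -> Tuple[float, float]:
    """Inner and outer front-wheel steering angles (rad) for an ideal Ackermann turn."""
    if turn_radius_m <= track_m / 2:
        raise ValueError("turn radius must exceed half the track width")
    inner = math.atan(wheelbase_m / (turn_radius_m - track_m / 2))
    outer = math.atan(wheelbase_m / (turn_radius_m + track_m / 2))
    return inner, outer


def bicycle_step(x: float, y: float, theta: float, v: float, steer: float,
                 wheelbase_m: float, dt: float) -> Tuple[float, float, float]:
    """One Euler step of the kinematic bicycle model (rear-axle reference point)."""
    x += v * math.cos(theta) * dt
    y += v * math.sin(theta) * dt
    theta += v / wheelbase_m * math.tan(steer) * dt
    return x, y, theta
```

The ideal Ackermann condition, cot(δ_out) - cot(δ_in) = w/L, is a quick check that a steering linkage or a commanded pair of angles is consistent.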

Abstract

This research presents a position and orientation estimation system based on computer vision algorithms and inertial data from the inertial measurement unit built into a smartphone. The system estimates position and orientation in real time. An Android application was developed that captures images of the environment and runs the computer vision algorithms. During implementation, the Harris, Shi-Tomasi, FAST, and SIFT feature detectors were tested to find the one efficient enough to run on an embedded processor such as a smartphone's. The displacement of the camera attached to a mobile agent is calculated with an optical flow method. Additionally, the smartphone's gyroscope data are used to estimate the agent's orientation. The system also simulates the estimated movement in a three-dimensional environment that runs on a computer; the smartphone sends the position and orientation data to the computer wirelessly over Wi-Fi. The three-dimensional environment is a digital model of the central block of the Universidad de las Fuerzas Armadas ESPE, where the system was tested.
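The abstract does not say how gyroscope readings become an orientation. One common approach, assumed here, integrates the body-frame angular rates (rad/s, the unit Android reports for its gyroscope sensor) into a unit quaternion at every sample. The rotation vector ω·dt is converted to a small rotation quaternion, which is then multiplied onto the current attitude. The function names are illustrative, and gyroscope drift is not corrected.

```python
import math
from typing import Iterable, Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z)


def quat_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def integrate_gyro(samples: Iterable[Tuple[float, float, float]], dt: float,
                   q: Quat = (1.0, 0.0, 0.0, 0.0)) -> Quat:
    """Integrate body-frame angular rates (rad/s) sampled every dt seconds."""
    for wx, wy, wz in samples:
        rate = math.sqrt(wx * wx + wy * wy + wz * wz)
        angle = rate * dt
        if angle < 1e-12:
            continue  # no measurable rotation in this sample
        s = math.sin(angle / 2) / rate  # scales omega to axis * sin(angle/2)
        dq = (math.cos(angle / 2), wx * s, wy * s, wz * s)
        q = quat_mul(q, dq)  # body-frame increment: right-multiply
        n = math.sqrt(sum(c * c for c in q))
        q = tuple(c / n for c in q)  # renormalize to counter rounding drift
    return q


def yaw_of(q: Quat) -> float:
    """Heading (rotation about z) extracted from a unit quaternion."""
    w, x, y, z = q
    return math.atan2(2 * (w * z + x * y), 1 - 2 * (y * y + z * z))
```

Rotating at π/2 rad/s about z for one second (100 samples at 10 ms) yields a yaw of π/2. In practice this estimate drifts and is usually fused with another source, such as the visual displacement described above.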

Abstract

This article presents a vision-based detection system for a micro-UAV, implemented in parallel with an autonomous GPS-based mission. The research seeks to identify targets of value for decision-making in military reconnaissance operations. YOLO-based algorithms were used in real time to detect people and vehicles while the UAV carries out an automated navigation mission. The project was implemented at the CICTE Military Applications Research Center as part of an automatic takeoff, navigation, detection, and landing system. According to the sensitivity and specificity analysis, YOLO V3-based detection performs efficiently in real time in outdoor environments. This holds both during autonomous navigation and while the target is recognized with the UAV hovering, at different camera angles.
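The sensitivity and specificity mentioned above are computed from confusion counts: sensitivity = TP/(TP + FN) and specificity = TN/(TN + FP). For an object detector, a detection usually counts as a true positive when its box overlaps a ground-truth box by at least some intersection-over-union (IoU) threshold. A true negative needs an explicit definition, for example a frame without targets in which nothing is detected. Both that definition and any IoU threshold are assumptions here, because the abstract does not state them.

```python
from dataclasses import dataclass
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


@dataclass
class Counts:
    tp: int  # targets correctly detected
    fp: int  # detections with no matching target
    tn: int  # negatives correctly left undetected (definition is an assumption)
    fn: int  # targets missed


def sensitivity(c: Counts) -> float:
    denom = c.tp + c.fn
    return c.tp / denom if denom else float("nan")


def specificity(c: Counts) -> float:
    denom = c.tn + c.fp
    return c.tn / denom if denom else float("nan")


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0
```

For example, 90 detected targets out of 100, with 95 of 100 empty frames correctly left undetected, give a sensitivity of 0.90 and a specificity of 0.95.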

Abstract

A fundamental step in determining the position (pose) of an object is determining its rotation and translation in space. Visual odometry is the process of determining the location and orientation of a camera by analyzing a sequence of images. The algorithm traces the trajectory of a body in an open environment by matching points across a sequence of images to determine the change in translation and rotation. Lane detection is proposed as feedback for the visual odometry algorithm, yielding more robust results. The algorithm was programmed with OpenCV 3.0 in Python 2.7 and run on Ubuntu 16.04. Given the satisfactory results, future work will develop a computational platform that determines a vehicle's position in space to assist with parking.
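Once each frame pair yields a relative motion, the trajectory is obtained by chaining these motions. In OpenCV the relative rotation and translation would typically come from `cv2.findEssentialMat` and `cv2.recoverPose`. The abstract does not detail the chaining step, so the following is a minimal planar sketch under stated assumptions. Each step is expressed as (dx, dy, dθ) in the vehicle's own frame, and the translation has already been scaled to metres, because monocular visual odometry recovers translation only up to scale. The names `compose` and `trajectory` are illustrative.

```python
import math
from typing import Iterable, List, Tuple

Pose = Tuple[float, float, float]  # x (m), y (m), heading (rad)


def compose(pose: Pose, delta: Pose) -> Pose:
    """Apply a body-frame motion (dx, dy, dtheta) to a world-frame pose."""
    x, y, th = pose
    dx, dy, dth = delta
    return (x + math.cos(th) * dx - math.sin(th) * dy,
            y + math.sin(th) * dx + math.cos(th) * dy,
            th + dth)


def trajectory(deltas: Iterable[Pose], start: Pose = (0.0, 0.0, 0.0)) -> List[Pose]:
    """Accumulate per-frame relative motions into a list of world-frame poses."""
    path = [start]
    for d in deltas:
        path.append(compose(path[-1], d))
    return path
```

Driving 1 m forward and turning 90° four times returns the vehicle to the origin. Errors in each step accumulate in exactly this composition, which is why an external cue such as lane detection helps correct the estimate.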

Abstract

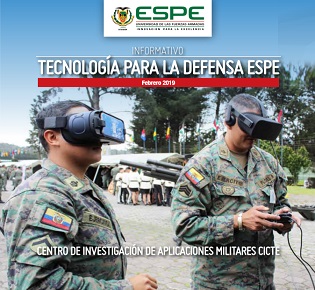

In this article, we propose an augmented reality system developed in Unity-Vuforia. The system simulates a war environment using three-dimensional objects and audiovisual resources to recreate a realistic war conflict. Vuforia uses a database to create the target images, and, together with the resources of the Unity game engine, animation algorithms are developed and applied to the 3D objects. At the hardware level, the system uses physical images and the camera of a mobile device. Combined with the programming, these make it possible to visualize the interaction of the objects through Vuforia's image recognition and tracking algorithms. The user can interact with the physical field and the digital objects through a virtual button. The system was designed and tested for Android mobile devices, which offer acceptable performance and easy application integration.

Abstract

Unmanned aerial vehicle (UAV) applications depend directly on the installed payload. An electro-optical/infrared payload is required for law enforcement, traffic monitoring, and reconnaissance, surveillance, and target acquisition. A commercial off-the-shelf electro-optical/infrared camera is presented as a case study for developing an interface to control the UAV payload. Based on a proposed architecture, the interface displays the information from the sensor and combines it with data from the UAV systems. The interface is validated in UAV flight tests. The software interface extends the camera's original capabilities with an automatic fixed-point tracking feature. The flight test results show that the interface can be adapted to integrate electro-optical cameras into different aircraft.
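A fixed-point tracking feature must repeatedly compute where to point the camera so that it stays on a ground location while the UAV moves. The abstract does not describe how this is done. The sketch below assumes a flat local north-east-down (NED) frame in metres, ignores the UAV's roll and pitch, and expresses pan relative to the UAV heading (positive to the right) and tilt as negative below the horizon. The function name and conventions are hypothetical and are not the interface's actual implementation.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]  # north, east, down (m)


def gimbal_angles(uav_ned: Vec3, uav_yaw: float, target_ned: Vec3) -> Tuple[float, float]:
    """Pan (rad, relative to heading, + right) and tilt (rad, - below horizon).

    Assumes a flat local NED frame and a level UAV (roll and pitch ignored).
    """
    dn = target_ned[0] - uav_ned[0]
    de = target_ned[1] - uav_ned[1]
    dd = target_ned[2] - uav_ned[2]  # positive when the target is below the UAV
    azimuth = math.atan2(de, dn)  # bearing from north, clockwise positive
    # Wrap pan into [-pi, pi) so the gimbal takes the short way round.
    pan = (azimuth - uav_yaw + math.pi) % (2 * math.pi) - math.pi
    horizontal = math.hypot(dn, de)
    tilt = -math.atan2(dd, horizontal)
    return pan, tilt
```

Recomputing these angles at every telemetry update keeps the camera on the chosen point. A real gimbal controller would also compensate for attitude and for the gimbal's mounting offsets.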

Abstract

In this article, we present real-time depth estimation with the on-board camera of a micro-UAV using convolutional neural networks. The experiments and results of implementing the system on a micro-UAV are presented to verify the improvement of the unsupervised model with monocular cameras and to measure its error relative to the real model.
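The abstract does not name its error metrics. A common evaluation practice for unsupervised monocular depth models, assumed here, first discards pixels without valid ground truth. It then rescales the prediction by the ratio of medians, because a monocular network predicts depth only up to scale, and finally reports the absolute relative error and the RMSE. The function names are illustrative, and depth maps are flattened to lists for brevity.

```python
import math
from statistics import median
from typing import List, Sequence, Tuple


def valid_pairs(pred: Sequence[float], gt: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Keep only pixels with valid (positive) ground-truth depth."""
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0]
    return [p for p, _ in pairs], [g for _, g in pairs]


def median_scale(pred: Sequence[float], gt: Sequence[float]) -> List[float]:
    """Resolve the monocular scale ambiguity by matching medians."""
    s = median(gt) / median(pred)
    return [p * s for p in pred]


def abs_rel(pred: Sequence[float], gt: Sequence[float]) -> float:
    """Mean of |pred - gt| / gt."""
    return sum(abs(p - g) / g for p, g in zip(pred, gt)) / len(gt)


def rmse(pred: Sequence[float], gt: Sequence[float]) -> float:
    """Root-mean-square error in the ground truth's units (e.g. metres)."""
    return math.sqrt(sum((p - g) ** 2 for p, g in zip(pred, gt)) / len(gt))
```

A prediction that is correct except for a global factor of 2 scores zero error after median scaling. This is why such results should be read as relative-depth accuracy rather than metric accuracy.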

Abstract

This article presents the physical design of a tetrapod robot, highlighting the low-level three-dimensional movement the robot uses to travel from one point to another. The navigation system was also examined in unstructured environments viewed from a top-down perspective, where images are analyzed and processed to avoid collisions between the robot and static obstacles. Using probabilistic techniques and partial information about the environment, RRT generates paths that look less artificial.
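A rapidly-exploring random tree (RRT) grows a tree from the start position by repeatedly sampling a random point and extending the nearest tree node a fixed step toward it. It keeps the new node only if the connecting segment is collision-free, and it stops once a node can reach the goal. The sketch below is a minimal 2D version under assumptions not stated in the abstract: circular static obstacles (for instance, extracted from the top-down image), a rectangular workspace, and a small goal bias. All names and default parameters are illustrative.

```python
import math
import random
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]
Circle = Tuple[float, float, float]  # center x, center y, radius


def segment_clear(a: Point, b: Point, obstacles: List[Circle]) -> bool:
    """True if segment a-b does not touch any circular obstacle."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    seg_len2 = dx * dx + dy * dy
    for cx, cy, r in obstacles:
        # Closest point on the segment to the circle center.
        t = 0.0 if seg_len2 == 0 else max(
            0.0, min(1.0, ((cx - a[0]) * dx + (cy - a[1]) * dy) / seg_len2))
        if math.hypot(a[0] + t * dx - cx, a[1] + t * dy - cy) <= r:
            return False
    return True


def rrt(start: Point, goal: Point, obstacles: List[Circle],
        bounds: Tuple[float, float, float, float], step: float = 0.5,
        goal_tol: float = 0.5, max_iters: int = 5000, goal_bias: float = 0.05,
        seed: Optional[int] = None) -> Optional[List[Point]]:
    """Return a collision-free path from start to goal, or None if none is found.

    bounds is (xmin, xmax, ymin, ymax).
    """
    rng = random.Random(seed)
    xmin, xmax, ymin, ymax = bounds
    nodes: List[Point] = [start]
    parent: Dict[int, int] = {0: -1}
    for _ in range(max_iters):
        sample = goal if rng.random() < goal_bias else (
            rng.uniform(xmin, xmax), rng.uniform(ymin, ymax))
        # Nearest existing node (a linear scan is fine for small trees).
        i_near = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[i_near]
        d = math.dist(near, sample)
        if d == 0:
            continue
        # Steer: move at most `step` toward the sample.
        s = min(step, d) / d
        new = (near[0] + (sample[0] - near[0]) * s, near[1] + (sample[1] - near[1]) * s)
        if not segment_clear(near, new, obstacles):
            continue
        nodes.append(new)
        parent[len(nodes) - 1] = i_near
        if math.dist(new, goal) <= goal_tol and segment_clear(new, goal, obstacles):
            nodes.append(goal)
            parent[len(nodes) - 1] = len(nodes) - 2
            # Walk back through parents to recover the path.
            path, i = [], len(nodes) - 1
            while i != -1:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None
```

Because samples are random, the raw path is jagged. Implementations usually shortcut or smooth it before sending it to the robot's gait controller.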